At CES 2026, NVIDIA revealed its next-generation AI computing platform, Vera Rubin, which the company presents as its most ambitious to date. Founder and CEO Jensen Huang made the announcement on January 5 in Las Vegas. The platform is intended as a major step forward in building, training, and deploying AI systems at scale.

NVIDIA named the platform after Vera Florence Cooper Rubin, the American astronomer whose measurements of galaxy rotation provided key evidence for dark matter. The choice continues the company’s tradition of honoring influential scientists. The Vera Rubin platform also reflects NVIDIA’s vision for the future of AI computing infrastructure, in which performance, efficiency, and integration are central.

NVIDIA’s Vision for the Next Phase of AI Computing

Artificial intelligence has moved beyond experimentation and into real-world deployment across industries. From generative AI and autonomous systems to scientific research and enterprise applications, the demand for faster and more efficient AI computing has grown sharply. NVIDIA’s announcement at CES 2026 directly addresses this shift.

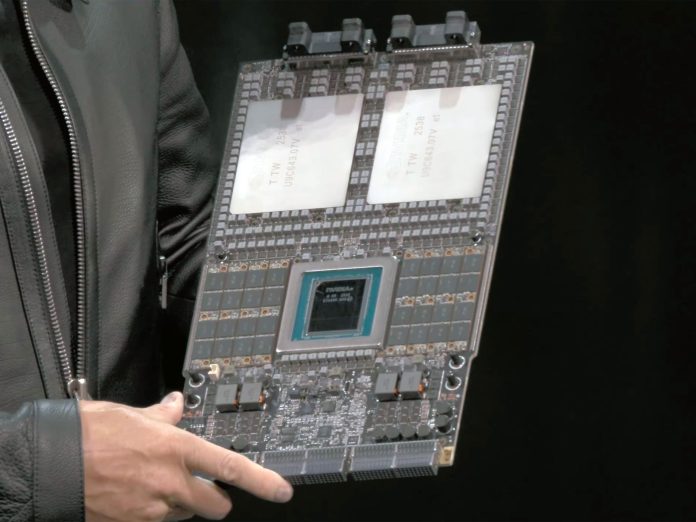

The Vera Rubin platform is not just another chip release. Instead, it represents a tightly integrated AI computing architecture designed to support massive AI workloads that traditional systems struggle to handle. NVIDIA is positioning Rubin as the successor to its Blackwell architecture, pushing boundaries in performance, scalability, and energy efficiency.

By focusing on an end-to-end approach, combining GPUs, CPUs, memory, and networking into a unified system, NVIDIA aims to simplify AI infrastructure while delivering unprecedented computing power.

What Is the Vera Rubin AI Computing Platform?

The Vera Rubin platform is NVIDIA’s next-generation AI computing architecture, built specifically for large-scale artificial intelligence workloads. Unlike traditional standalone processors, Rubin is a co-designed system: NVIDIA developed its components together so that they work as a single unit.

At its core, the platform integrates:

- High-performance Rubin GPUs

- A custom Vera CPU

- Advanced memory technologies

- High-speed networking and interconnects

This design allows data to move more efficiently between components, reducing bottlenecks that often slow down AI training and inference. The result is faster model development, improved efficiency, and better scalability for enterprises and cloud providers.

NVIDIA emphasized that Rubin can handle the growing complexity of modern AI models, including those with trillions of parameters. This makes it particularly suitable for generative AI, large language models, and scientific simulations.

Product Details: A New Architecture Built for Scale

One of the most notable aspects of the Vera Rubin platform is its rack-scale design philosophy. Instead of focusing on individual chips, NVIDIA has designed Rubin to operate as part of large AI systems deployed in data centers.

NVIDIA expects the Rubin GPUs to deliver significant performance improvements over previous generations, offering higher throughput while using less power per computation. This balance between power and efficiency is critical as AI workloads become more resource-intensive.
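To make "less power per computation" concrete: efficiency is commonly expressed as throughput divided by power draw. The short sketch below illustrates that ratio only. The function name `perf_per_watt` and every number in it are hypothetical placeholders chosen for the arithmetic, not NVIDIA specifications or measurements.

```python
def perf_per_watt(throughput_ops_per_s: float, power_watts: float) -> float:
    """Return operations per second delivered for each watt drawn."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput_ops_per_s / power_watts


# Hypothetical placeholder figures, NOT published or measured specs.
older = perf_per_watt(throughput_ops_per_s=1.0e15, power_watts=1000.0)
newer = perf_per_watt(throughput_ops_per_s=3.0e15, power_watts=1500.0)

# A ratio above 1 means the newer part does more work per watt,
# even though it draws more total power in this made-up example.
efficiency_gain = newer / older
print(f"efficiency gain: {efficiency_gain:.1f}x")
```

In this invented example the newer part draws 50% more power but triples throughput, so each watt does twice the work. That is the sense in which a chip can be both more powerful and more efficient.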

Additionally, the platform introduces next-generation memory technologies that allow AI models to access and process massive datasets more quickly. Faster memory access means reduced training times and more responsive AI applications, which is especially important for real-time and enterprise use cases.

Another key feature is the platform’s ability to support large-scale parallel processing. By allowing multiple GPUs and CPUs to work together as a unified system, Rubin enables AI developers to scale their workloads without redesigning their infrastructure.

Product Details: Integrated Design for Smarter AI Systems

Beyond raw performance, the Vera Rubin platform focuses heavily on integration and efficiency. NVIDIA has designed the system so that computing, memory, and networking components communicate with minimal latency. This tight integration improves overall system responsiveness and reduces energy waste.

The inclusion of the custom Vera CPU alongside Rubin GPUs allows for better workload management. While GPUs handle intensive AI computations, the CPU manages data orchestration and system tasks, ensuring smoother operation across the entire platform.
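The division of labor described above resembles a producer-consumer pipeline. The sketch below is a generic illustration of that pattern, not NVIDIA software: it runs entirely on an ordinary CPU using Python threads, and the names `orchestrate`, `compute`, and `run_pipeline` are hypothetical. One thread plays the orchestration role by preparing and queuing batches, while a second thread stands in for the compute-heavy side.

```python
import queue
import threading


def orchestrate(batches, work_queue):
    """Orchestration role: hand prepared batches to the compute worker."""
    for batch in batches:
        work_queue.put(batch)
    work_queue.put(None)  # sentinel: no more work


def compute(work_queue, results):
    """Compute role (simulated on the CPU here): do the math for each batch."""
    while True:
        batch = work_queue.get()
        if batch is None:
            break
        results.append(sum(x * x for x in batch))


def run_pipeline(batches):
    # A small bounded queue lets the producer stay slightly ahead of the
    # consumer without buffering the whole dataset in memory.
    work_queue = queue.Queue(maxsize=2)
    results = []
    producer = threading.Thread(target=orchestrate, args=(batches, work_queue))
    consumer = threading.Thread(target=compute, args=(work_queue, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results


print(run_pipeline([[1, 2], [3, 4], [5]]))  # prints [5, 25, 25]
```

The design point is that neither side waits on the other longer than necessary: the orchestrator keeps the queue filled while the compute worker drains it.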

NVIDIA has also optimized the platform for advanced AI workloads such as training, inference, and simulation. This makes Rubin versatile enough to support industries ranging from healthcare and finance to climate research and autonomous vehicles.

By treating AI computing as a complete ecosystem rather than isolated components, NVIDIA is redefining how future AI infrastructure will be designed and deployed.

Why This Matters for the AI Industry

NVIDIA’s announcement comes at a time when AI adoption is accelerating across the globe. Enterprises are moving from pilot projects to full-scale deployments, and they need infrastructure that can keep up with growing demands.

The Vera Rubin platform addresses several critical challenges:

- Scalability – Designed for large data centers and AI supercomputers

- Efficiency – Improved performance per watt to reduce operational costs

- Flexibility – Suitable for training and inference across industries

- Future readiness – Built to support increasingly complex AI models

For cloud service providers and enterprises, this means faster innovation cycles and lower barriers to deploying advanced AI solutions. For researchers, it opens the door to tackling more complex problems in fields like physics, medicine, and climate science.

NVIDIA’s CES 2026 Moment

CES has long been a stage for transformative technology announcements, and NVIDIA’s unveiling of Vera Rubin was one of the most significant highlights of CES 2026. Jensen Huang’s keynote emphasized the company’s belief that AI computing will be foundational to nearly every industry in the coming years.

By introducing Rubin at CES, NVIDIA reinforced its leadership role in shaping the AI landscape. The announcement also sent a clear message to competitors: the future of AI computing lies in integrated, scalable platforms rather than standalone components.

The naming of the platform after Vera Rubin also reflects NVIDIA’s broader philosophy, combining scientific inspiration with technological innovation to drive progress.

Expected Launch Timeline and Availability

NVIDIA has officially announced the Vera Rubin platform and says production is nearing completion, with a broader rollout expected in the second half of 2026.

This timeline aligns with NVIDIA’s strategy of allowing developers, partners, and cloud providers time to prepare for deployment. Once launched, Rubin is expected to power next-generation AI data centers and supercomputing systems worldwide.

Early adoption is likely to come from hyperscale cloud providers, research institutions, and large enterprises that require cutting-edge AI computing infrastructure.

Conclusion

NVIDIA’s announcement of the Vera Rubin platform at CES 2026 marks a defining moment in the evolution of AI computing. By moving beyond traditional chip design and focusing on a fully integrated AI computing platform, NVIDIA is addressing the real-world challenges of scale, efficiency, and performance. The platform is expected to launch in the second half of 2026 and is set to play a crucial role in powering the next wave of AI innovation.